.NET 8 Native AOT support in AWS Lambda functions

In February 2024, AWS announced support for the .NET 8 runtime in Lambda, including Native Ahead-of-Time (AOT) compiled functions. .NET 8 brings API enhancements, performance optimizations, improved Native AOT compilation, and C# 12. According to benchmarks published by AWS, Native AOT compiled Lambda functions can achieve up to 86% faster cold start times.

.NET Native AOT

For those unfamiliar with the term, .NET Native Ahead-of-Time (AOT) compilation is one of the latest and most significant performance improvements on the .NET platform.

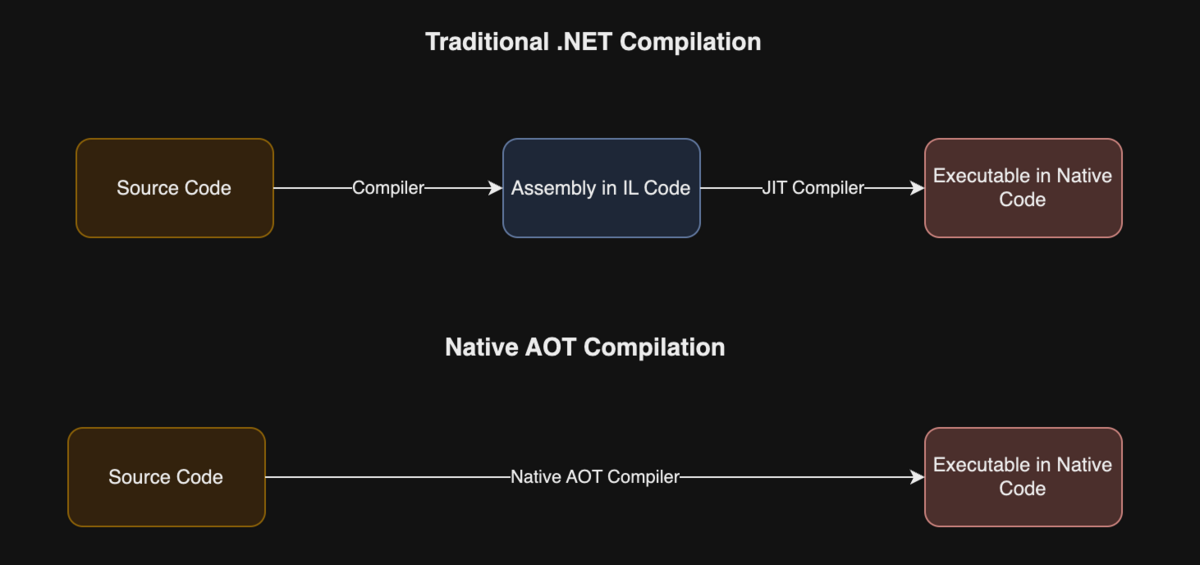

Traditionally, programs written in .NET languages undergo a two-step compilation process. First, the code written in a .NET language such as C# is compiled into assemblies that contain Intermediate Language (IL) code. When the program executes, the .NET Common Language Runtime (CLR) uses the Just-In-Time (JIT) compiler to translate the IL code into native code that runs directly on the CPU. Because this translation happens at run time, it adds to startup time and memory use, and this is where AOT compilation shines.

In contrast to the traditional .NET compilation process, Native AOT compilation condenses this into a single step. It compiles the .NET code directly into native code, resulting in a single executable that does not need a separately installed .NET runtime and executes directly on the CPU. The diagram above compares the two approaches.
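As a rough illustration of the difference, a program can ask the runtime whether dynamic code generation is available. The sketch below uses the `RuntimeFeature.IsDynamicCodeSupported` property from the .NET base class library; the `RuntimeInfo` class and its messages are hypothetical names chosen for this example. The property is also false in some other ahead-of-time configurations, so treat a false result as "no JIT available" rather than proof of Native AOT specifically.

```csharp
using System.Runtime.CompilerServices;

// Hypothetical helper, for illustration only.
public static class RuntimeInfo
{
    // Under the regular CLR the JIT can emit code at run time, so the
    // property is true. In a Native AOT executable all code was compiled
    // ahead of time and the property is false.
    public static string Describe() =>
        RuntimeFeature.IsDynamicCodeSupported
            ? "JIT-capable runtime (dynamic code generation supported)"
            : "Ahead-of-time compiled (no dynamic code generation)";
}
```

Logging `RuntimeInfo.Describe()` once during startup is a cheap way to confirm which build of a function is actually running.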

Benefits of .NET Native AOT

- Improved performance

  - Dramatically faster startup times compared to traditional .NET applications.

  - No JIT compilation at run time, which removes the associated warm-up overhead.

- Reduced disk footprint

  - The executable contains only the code that is actually used, from the application and its external dependencies.

  - Benefits include smaller container images and faster deployment times.

- Reduced memory consumption

  - Native AOT compiled apps consume less memory, which improves scalability.

Limitations of .NET Native AOT compilation

Despite improvements in .NET Native AOT compilation, there are still some limitations:

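- Dynamic loading of assemblies and run-time code generation are not supported, and reflection is limited to what the compiler can analyze statically

- Requires assembly trimming

- Not every .NET API or third-party library is compatible with trimming and a self-contained native executable

- Cross-OS compilation is not supported (we’ll get to that in the Prerequisites section)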
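To make the reflection limitation more concrete, here is a minimal sketch of a common workaround: replacing string-based type activation with a static factory map. The `IGreeter` types and `GreeterFactory` are hypothetical and exist only for this example; they are not part of any .NET or AWS library.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types, used only to illustrate the pattern.
public interface IGreeter { string Greet(string name); }

public sealed class FormalGreeter : IGreeter
{
    public string Greet(string name) => $"Good day, {name}.";
}

public sealed class CasualGreeter : IGreeter
{
    public string Greet(string name) => $"Hi {name}!";
}

public static class GreeterFactory
{
    // Reflection-heavy version (problematic under trimming and Native AOT):
    // the compiler cannot tell which types a run-time string refers to,
    // so those types may be trimmed out of the executable.
    //   var type = Type.GetType(typeName);
    //   var greeter = (IGreeter)Activator.CreateInstance(type!)!;

    // AOT-friendly version: every type is referenced statically,
    // so the trimmer keeps exactly what is needed.
    private static readonly Dictionary<string, Func<IGreeter>> Creators = new()
    {
        ["formal"] = () => new FormalGreeter(),
        ["casual"] = () => new CasualGreeter(),
    };

    public static IGreeter Create(string kind) =>
        Creators.TryGetValue(kind, out var create)
            ? create()
            : throw new ArgumentException($"Unknown greeter kind '{kind}'.", nameof(kind));
}
```

With this in place, `GreeterFactory.Create("casual").Greet("Ada")` returns `Hi Ada!` without any reflection. The trade-off is that new implementations must be registered in code rather than discovered at run time.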

.NET Native AOT in AWS Lambda functions

Understanding these benefits, it becomes clear that in a serverless environment, where a new execution environment has to be started whenever no warm one is available (a cold start), Native AOT compiled Lambda functions can provide a significant performance boost. The .NET 8 runtime for AWS Lambda brings improvements of its own on top of the .NET 8 Native AOT enhancements: it is based on the Amazon Linux 2023 minimal container image, which offers a smaller deployment footprint and updated libraries. Let’s delve into creating a .NET 8 Native AOT Lambda function.

Prerequisites

Docker

Docker is essential because of the cross-OS compilation limitation: Native AOT Lambda functions must be compiled in an Amazon Linux 2023 environment. Docker provides that environment, and the .NET CLI, the Amazon Lambda tools, and SAM use it to streamline the whole process.

.NET 8 SDK

To build the code, we need to install the .NET 8 SDK.

Amazon.Lambda.Tools and Amazon.Lambda.Templates

These are valuable extensions for the .NET CLI, facilitating the creation of Lambda .NET projects from templates and handling build and deployment tasks.

SAM

In our example, we’ll use the AWS Serverless Application Model (SAM) CLI for local testing, invocation, and deployment of the Lambda function.

Getting Started

Machine setup

To begin, let’s ensure our system is equipped with the necessary tools to create the C# project and generate the code. Make sure Docker, .NET 8 SDK, and SAM CLI are installed. You can download them from the following links:

- Docker: www.docker.com/products/docker-desktop/

- SAM CLI: docs.aws.amazon.com/serverless-application-model/latest/developerguide/install-sam-cli.html

- .NET 8 SDK: dotnet.microsoft.com/en-us/download/dotnet/8.0

The following commands install the necessary extensions for the .NET CLI (with the .NET 8 SDK, `dotnet new install` replaces the older `dotnet new -i` form):

    dotnet tool install -g Amazon.Lambda.Tools
    dotnet new install Amazon.Lambda.Templates

Initialize the project with SAM CLI

Next, use SAM CLI to generate the C# code for your Lambda function by running the following command:

sam init

SAM guides you through an interactive setup process. Follow these steps when prompted:

- Choose to use AWS Quick Start Templates

- Select the Hello World Example template

- When asked, choose not to use the most popular runtime, then select dotnet8 at the next prompt

- Select Zip package type

- Select the Hello World Example using native AOT starter template

- For the remaining prompts, accept the default values and choose a name for the project

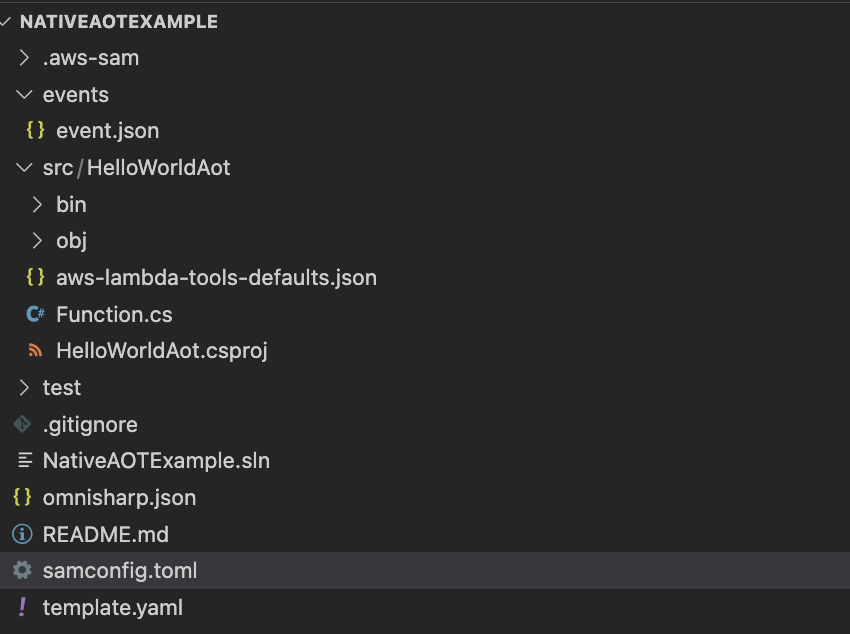

After completing these steps, SAM CLI will create the folder structure and generate the necessary files for your project.

Build the code

Now that we’ve set up our project, we’re ready to build, invoke locally, or deploy our Lambda function. It’s important to note that although Docker handles cross-OS compilation, the architecture of our build machine must match the Lambda function’s architecture. The default architecture in the template is x86_64, so change it to arm64 if you are building on an ARM machine.
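As a sketch of where that setting lives, the fragment below shows the `Architectures` property of an `AWS::Serverless::Function` resource in the SAM template. The resource name matches the one used in the invoke command later in this article; the `CodeUri` value is an assumption, so keep whatever path `sam init` generated for your project.

```yaml
Resources:
  HelloWorldAotFunction:
    Type: AWS::Serverless::Function
    Properties:
      CodeUri: ./src/HelloWorldAot/   # assumed path, keep the generated value
      Handler: bootstrap              # matches <AssemblyName> in the .csproj
      Runtime: dotnet8
      Architectures:
        - arm64                       # use x86_64 on an Intel/AMD build machine
```

Only the `Architectures` entry needs to change; the valid values are `x86_64` and `arm64`.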

Ensure Docker is running and execute the following commands in your console:

    cd ./NativeAOTExample   # Navigate to your project directory
    sam build               # Build the Lambda function code

The initial build might take some time as Docker fetches the AL2023 image to compile the code. Once completed, you should find the build artifacts in the designated folder.

Invoke the Lambda

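To invoke the Lambda function locally, use the following command. The -d 5858 option exposes port 5858 for attaching a debugger and can be omitted for a plain invocation: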

sam local invoke -d 5858 HelloWorldAotFunction -e events/event.json

On macOS, recent Docker Desktop versions may not expose the Docker socket at the default location, in which case SAM cannot find the running Docker daemon. If you encounter this issue, point DOCKER_HOST at the user-level socket instead:

DOCKER_HOST=unix://$HOME/.docker/run/docker.sock sam local invoke -d 5858 HelloWorldAotFunction -e events/event.json

Deploy

To deploy your Lambda function, use SAM CLI with the following command:

sam deploy --guided

This command initiates an interactive session where you can configure various deployment aspects such as the stack name, AWS region, and whether SAM should create an IAM role. Default values can be sourced from ~/.aws/config, ~/.aws/credentials, and ./NativeAOTExample/samconfig.toml files.

SAM CLI can be seamlessly integrated into most CI/CD pipelines. For detailed integration instructions, refer to the AWS documentation here: docs.aws.amazon.com/serverless-application-model/latest/developerguide/deploying-cicd-overview.html

Deeper look

Let’s examine some of the configuration and code files generated by SAM:

samconfig.toml

    # More information about the configuration file can be found here:
    # https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/serverless-sam-cli-config.html
    version = 0.1

    [default]
    [default.global.parameters]
    stack_name = "NativeAOTExample"

    [default.build.parameters]
    cached = true
    parallel = true

    [default.validate.parameters]
    lint = true

    [default.deploy.parameters]
    capabilities = "CAPABILITY_IAM"
    confirm_changeset = true
    resolve_s3 = true

    [default.package.parameters]
    resolve_s3 = true

    [default.sync.parameters]
    watch = true

    [default.local_start_api.parameters]
    warm_containers = "EAGER"

    [default.local_start_lambda.parameters]
    warm_containers = "EAGER"

The samconfig.toml file allows us to configure parameters used by SAM for AWS connectivity and function deployment.

*.csproj file

This is our C# project file. Pay attention to the PublishAot property, which enables Native AOT compilation of our code.

    <Project Sdk="Microsoft.NET.Sdk">
      <PropertyGroup>
        <OutputType>exe</OutputType>
        <TargetFramework>net8.0</TargetFramework>
        <ImplicitUsings>enable</ImplicitUsings>
        <Nullable>enable</Nullable>
        <AWSProjectType>Lambda</AWSProjectType>
        <AssemblyName>bootstrap</AssemblyName>
        <!-- This property makes the build directory similar to a publish directory and helps the AWS .NET Lambda Mock Test Tool find project dependencies. -->
        <CopyLocalLockFileAssemblies>true</CopyLocalLockFileAssemblies>
        <!-- Generate native aot images during publishing to improve cold start time. -->
        <PublishAot>true</PublishAot>
        <!-- StripSymbols tells the compiler to strip debugging symbols from the final executable if we're on Linux and put them into their own file.
             This will greatly reduce the final executable's size.-->
        <StripSymbols>true</StripSymbols>
      </PropertyGroup>
      <ItemGroup>
        <PackageReference Include="Amazon.Lambda.Core" Version="2.2.0" />
        <PackageReference Include="Amazon.Lambda.RuntimeSupport" Version="1.10.0" />
        <PackageReference Include="Amazon.Lambda.Serialization.SystemTextJson" Version="2.4.0" />
        <PackageReference Include="Amazon.Lambda.APIGatewayEvents" Version="2.7.0" />
      </ItemGroup>
    </Project>

Function.cs

This file contains our function handler generated by SAM. It includes the entry point and handler code. Notably, it defines a partial class, LambdaFunctionJsonSerializerContext, which enables source generation in System.Text.Json. This is crucial because the default reflection-based serialization is not compatible with Native AOT compiled applications. Usage is straightforward: create the partial class and annotate it with a JsonSerializable attribute for each custom type used as input or output of our Lambda function.

    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using System.Net.Http;
    using System.Text.Json;
    using System.Text.Json.Serialization;
    using Amazon.Lambda.Core;
    using Amazon.Lambda.RuntimeSupport;
    using Amazon.Lambda.APIGatewayEvents;
    using Amazon.Lambda.Serialization.SystemTextJson;

    namespace HelloWorldAot;

    public class Function
    {
        private static readonly HttpClient client = new HttpClient();

        /// <summary>
        /// The main entry point for the Lambda function. The main function is called once during the Lambda init phase. It
        /// initializes the .NET Lambda runtime client passing in the function handler to invoke for each Lambda event and
        /// the JSON serializer to use for converting Lambda JSON format to the .NET types.
        /// </summary>
        private static async Task Main()
        {
            Func<APIGatewayHttpApiV2ProxyRequest, ILambdaContext, Task<APIGatewayHttpApiV2ProxyResponse>> handler = FunctionHandler;
            await LambdaBootstrapBuilder.Create(handler, new SourceGeneratorLambdaJsonSerializer<LambdaFunctionJsonSerializerContext>())
                .Build()
                .RunAsync();
        }

        private static async Task<string> GetCallingIP()
        {
            client.DefaultRequestHeaders.Accept.Clear();
            client.DefaultRequestHeaders.Add("User-Agent", "AWS Lambda .Net Client");

            var msg = await client.GetStringAsync("http://checkip.amazonaws.com/").ConfigureAwait(continueOnCapturedContext:false);

            return msg.Replace("\n","");
        }

        public static async Task<APIGatewayHttpApiV2ProxyResponse> FunctionHandler(APIGatewayHttpApiV2ProxyRequest apigProxyEvent, ILambdaContext context)
        {
            var location = await GetCallingIP();
            var body = new Dictionary<string, string>
            {
                { "message", "hello world" },
                { "location", location }
            };

            return new APIGatewayHttpApiV2ProxyResponse
            {
                Body = JsonSerializer.Serialize(body, typeof(Dictionary<string, string>), LambdaFunctionJsonSerializerContext.Default),
                StatusCode = 200,
                Headers = new Dictionary<string, string> { { "Content-Type", "application/json" } }
            };
        }
    }

    [JsonSerializable(typeof(APIGatewayHttpApiV2ProxyRequest))]
    [JsonSerializable(typeof(APIGatewayHttpApiV2ProxyResponse))]
    [JsonSerializable(typeof(Dictionary<string, string>))]
    public partial class LambdaFunctionJsonSerializerContext : JsonSerializerContext
    {
        // By using this partial class derived from JsonSerializerContext, we can generate reflection free JSON Serializer code at compile time
        // which can deserialize our class and properties. However, we must attribute this class to tell it what types to generate serialization code for.
        // See https://docs.microsoft.com/en-us/dotnet/standard/serialization/system-text-json-source-generation
    }
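To show what annotating the context with custom types might look like, here is a minimal sketch that is independent of the generated template. The `OrderRequest`, `OrderResponse`, `OrderJsonSerializerContext`, and `OrderJson` names are hypothetical and chosen only for this example; it assumes a .NET 8 project, where the System.Text.Json source generator runs as part of the build.

```csharp
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical input and output types for a Lambda function.
public record OrderRequest(string OrderId, int Quantity);
public record OrderResponse(string OrderId, string Status);

// One JsonSerializable attribute per type that crosses the JSON boundary.
// The source generator fills in the rest of this partial class at compile time.
[JsonSerializable(typeof(OrderRequest))]
[JsonSerializable(typeof(OrderResponse))]
public partial class OrderJsonSerializerContext : JsonSerializerContext
{
}

public static class OrderJson
{
    // Both calls use the generated type metadata instead of reflection.
    public static string Serialize(OrderResponse response) =>
        JsonSerializer.Serialize(response, OrderJsonSerializerContext.Default.OrderResponse);

    public static OrderRequest? Deserialize(string json) =>
        JsonSerializer.Deserialize(json, OrderJsonSerializerContext.Default.OrderRequest);
}
```

In a Lambda function, a context like this would be passed to `SourceGeneratorLambdaJsonSerializer<T>` in `Main`, the same way the generated code passes `LambdaFunctionJsonSerializerContext`. Forgetting to register a type typically surfaces as a run-time serialization error rather than a compile error, so it is worth covering each handler type with a test.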

Summary

We explored how .NET 8 Native Ahead-of-Time compilation can significantly improve cold start performance for serverless applications, which in turn can mean a smoother user experience, potentially lower costs, and better scalability. Tools like AWS SAM and the .NET CLI streamline the development process and make this feature easily accessible. We eagerly await further advancements from the .NET and AWS Lambda teams.